The Challenge

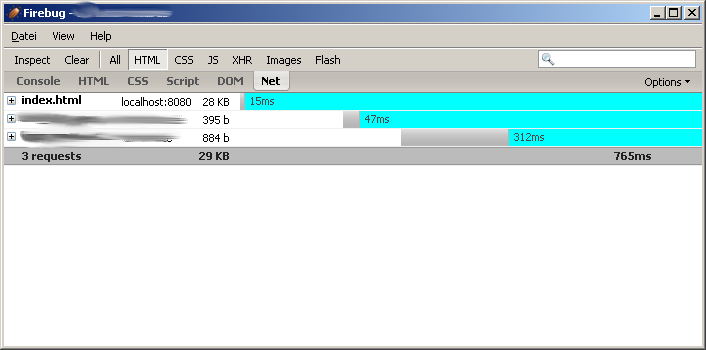

OK, if anybody has read this blog up to now, you might have asked yourself why we do the funny things we do and what all this business with 16 milliseconds and stuff is about.

We are a small team of (right now) three software developers who were assigned to replace a PHP web application, which has been up and running for about 10 years, with a new one written in Java. Despite the underlying technology, the website itself is quite successful: it is currently only present in the German market and has more than 100 million page impressions per month from more than 13 million visits, attracting more than 600,000 unique users in the same period. OK, it's not Google, but it's not that small either.

The current PHP website isn't that bad. It does what it is meant to do, and it's pretty fast. So why the rewrite?

As so often, it all comes down to TCO (total cost of ownership). The basic principles of the PHP webapp aren't bad and aren't even that outdated for a ten-year-old vessel, but over time, with a lot of different maintainers and no good documentation or big picture, software degrades and becomes a pain in the ass to maintain. Thus the decision to rewrite was born, with two main goals: easier (read: cheaper) maintenance, and a new site that is at least as fast as the current one, or at least not much slower.

As you might see from the previous posts, the general feeling right now is quite good, but we will see in the next few days/weeks when QA starts testing the thing. Knock on wood.

Blurps